Digit Classifier

Summer 2019 was a machine learning bootcamp for me, and the digit-classification project using the MNIST dataset was the first 'proper' ML project I worked on.

I built the model using Keras and performed some rudimentary hyperparameter search without even knowing what a hyperparameter was: I used Google Colab to automate testing of several different model architectures. After trying five or so, I settled on one that used two convolutional layers along with some max-pooling and dropout layers.
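For readers unfamiliar with Keras, here is a rough sketch of what a model with that shape might look like. It is not the project's original code: the filter counts, kernel sizes, dropout rates, the 128-unit dense layer and the Adam optimizer are assumptions borrowed from the common Keras MNIST CNN example, and `build_model` and `preprocess` are hypothetical helper names. The `num_classes` parameter is included because the EMNIST variants have different label counts (EMNIST Balanced, for instance, has 47 classes).

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers


def preprocess(images: np.ndarray) -> np.ndarray:
    """Normalise uint8 pixels from [0, 255] to [0, 1] and add a channel axis."""
    return (images.astype("float32") / 255.0)[..., None]


def build_model(num_classes: int = 10) -> keras.Model:
    """Two conv layers plus max-pooling and dropout.

    All layer sizes and rates below are assumed, illustrative values.
    """
    model = keras.Sequential([
        keras.Input(shape=(28, 28, 1)),                 # 28x28 greyscale digits
        layers.Conv2D(32, (3, 3), activation="relu"),   # 28x28 -> 26x26
        layers.Conv2D(64, (3, 3), activation="relu"),   # 26x26 -> 24x24
        layers.MaxPooling2D(pool_size=(2, 2)),          # 24x24 -> 12x12
        layers.Dropout(0.25),                           # regularisation
        layers.Flatten(),
        layers.Dense(128, activation="relu"),
        layers.Dropout(0.5),
        layers.Dense(num_classes, activation="softmax"),  # class probabilities
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",  # expects integer class labels
        metrics=["accuracy"],
    )
    return model
```

To train it, one could load MNIST with `keras.datasets.mnist.load_data()`, pass the images through `preprocess`, and call `model.fit` on the result; the integer labels that loader returns match the sparse cross-entropy loss. Trying several architectures then amounts to varying the layer list inside `build_model` and comparing validation accuracy.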

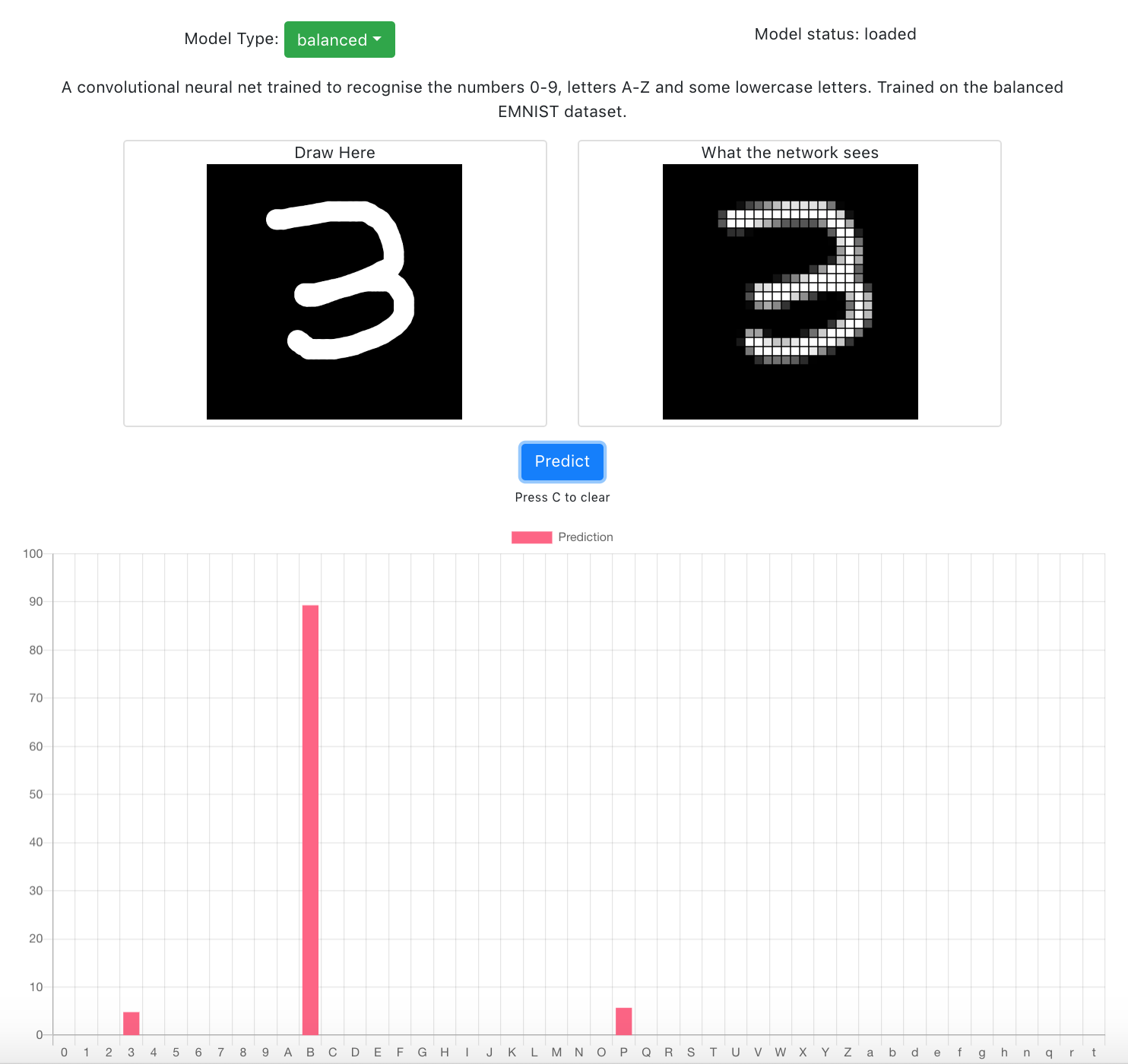

After some months of tweaking and improvements, the interface has grown into a platform that showcases several image classification models, ranging from one trained on the standard MNIST dataset to several trained on the extended EMNIST datasets.

The web interface was built with React and Bootstrap, with p5.js handling the interactive drawing canvas.

This project was pivotal for me with respect to machine learning: it introduced me to Keras and TensorFlow, as well as to many fundamental machine learning concepts such as dataset normalization, optimizer choice, model architecture and hyperparameter search. It inspired me to learn more about the subject, which led me to listen to the OCDevel machine learning podcast and then to complete Andrew Ng's Coursera course. Without this project I likely wouldn't be doing many of the projects I'm working on now, like the NEAB multi-agent work or predicting stock prices.